Analysis of H-Net Router

2025-09-23

On this page

- Simplifying the ratio loss

- Alternative ratio loss ideas

- What’s the point of this generalization?

- The router’s binary entropy

- The confidence score STE

- Interpreting the “confidence score”

- Alternative “confidence score”-like heuristics

- Directly penalizing high entropy

- Is forcing low entropy a good idea?

- Cosine Q/K routing

- The Holy Grail: ‘natural’ gradients for the down/upsampling

Warning: This post won’t make any sense unless you’ve read the H-Net paper.

Tl;dr: Goomba ratio loss is basically just L2 loss on the target ratio. I simplify it. I then propose a generalization which I claim has some nice properties. I analyze the router module’s entropy and the target ratio’s stability in training. I propose a few other speculative ideas.

Simplifying the ratio loss

For simplicity in what follows define to be our sequence length, and that .

Goomba’s ratio loss is defined in the paper as

I find this form more intuitive:

Clearly the right term vanishes whenever or . Likewise, the partials with respect to or vanish when the other attains .

To be explicit,

The partial with respect to isn’t useful since we can’t backpropagate through . We can safely ignore it.

To better understand what’s happening here let’s derive our own loss function. Our goal is for to attain . If we could differentiate wrt then the simplest loss function would be an L2 loss

Differentiating with respect to gives,

If we let then we get

Notice the resemblance to the partials above.

Since isn’t actually differentiable let’s just fudge this and see what happens. Assume that holds well enough that gradient pressure on will optimize our . Let’s make our derivative partial with respect to instead

We can find a loss function which satisfies our fudge: the simplest way is just multiplying by .

Notice that this loss function has identical optimization behavior to Goomba’s loss.1

This fudging works because and are connected via the ‘s. Gradient pressure on just applies uniform gradients to each . Monotonic changes in will have corresponding monotonic effects on both and . We use gradients wrt to increase or decrease the ‘s proportional to how far is from target, up to some scaling.

Why that particular choice of ? I agree, let’s just choose something simple like .

By the same process we get

This is also identical in optimization behavior. It’s probably possible to tweak the constant to be some function of which makes tuning the coefficient more intuitive; it’s not clear to me that the Goomba constant does.

Here’s the equation to convert loss coefficients:

E.g., for and we have .

E.g., for and we have .

This recipe makes it easy to come up with other variants of their loss function. Want an L1, cubic, Huber, or CE loss on ? Just write down the loss you want on and its derivative with respect to and stick a factor on it.

But wait, you say. This loss function attains its minimum at and . So does the original Goomba loss (indeed also and ). But wait, isn’t a local minimum. Neither is it in the original Goomba loss (it’s a saddle point).

and are connected via the ‘s anyway so these degenerate minima aren’t possible. And, in any case, only matters for optimization.

Alternative ratio loss ideas

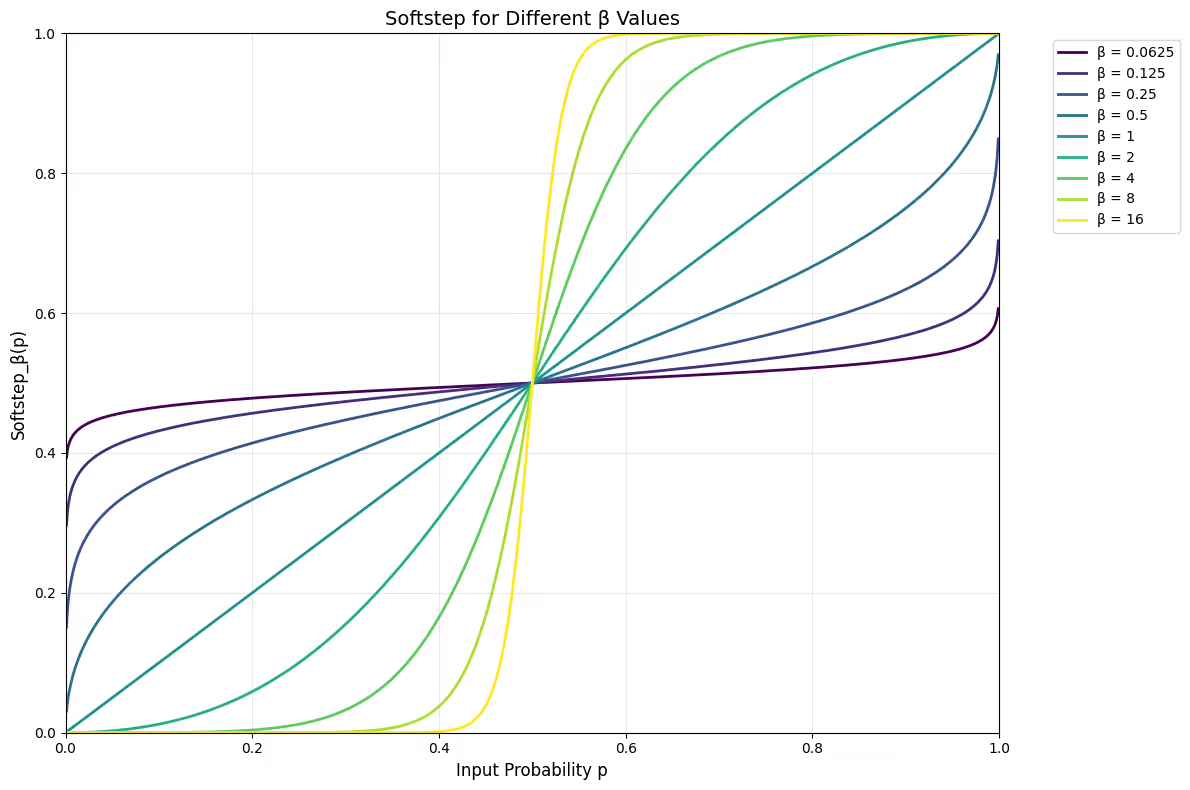

The “boundary” function can be smoothly approximated by a family of functions that sharpen/soften in logit-space and map back to probability space.

with where represents the inverse-temperature parameter.

Notice the following:

By analogy with we can define

Notice,

What happens when we optimize ?

Let’s look at :

Note that

Let .

And, assume there are positions in the sequence.

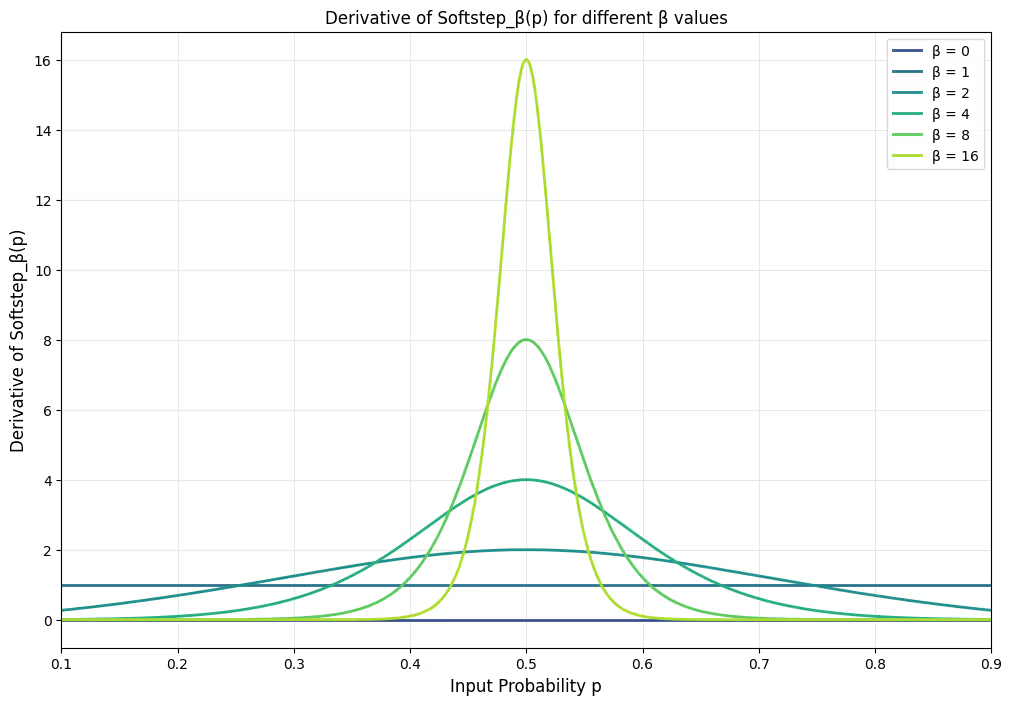

The only part here which depends on is . To understand this non-uniform gradient pressure let’s graph for different inverse temperatures

For moderate values we have a bump that’s centered around . You can see as it’s approaching a Dirac delta at .

For we have a horizontal line at : no gradients at all. For we have a horizontal line at : no effective dependency on . As we approach the Dirac delta: only the exact value receives any gradient (measure zero), so effectively we get no gradient. As above, and we know isn’t differentiable, so this checks out.

For with , we push with the same magnitude on the ‘s until .

For with , we push on ‘s until , harder near the threshold. This causes a bit of polarization while getting on target.

In practice, moderate inverse temperature values like seem to work fine.

Looking back at our gradient

Maybe we think the weight is nice and all, but in the end we want to get on target, not . For moderate inverse temperatures these won’t be exactly the same.

So, what if we wanted a loss that both uses the but also keeps us on the hard target, i.e., such that

We can achieve this easily using the same trick as :

Just as before, isn’t differentiable so its partial is irrelevant so only matters, and we get what we need.

Notice that this is a generalization of (and therefore a generalization of Goomba’s original ratio loss) since :

Values of just over allow us to nudge values away from .

If, for whatever reason, we actually did want to constrain too we could do,

This also works fine and just shapes the distribution of ‘s a bit more. Benefits untested/unclear. But again, since can just be written in terms of it makes you wonder why we stop shaping the distribution here. Why not use multiple values of ? E.g., suppose our highest is . Then we could do something overkill like

We’re doing the use-actual-hard--trick for the highest inverse temperature term and that last .

There might be some benefit to adding the target term to , but I think the above is indeed overkill.

What’s the point of this generalization?

In a selfish mathematical sense it’s nice to realize and as one abstraction.

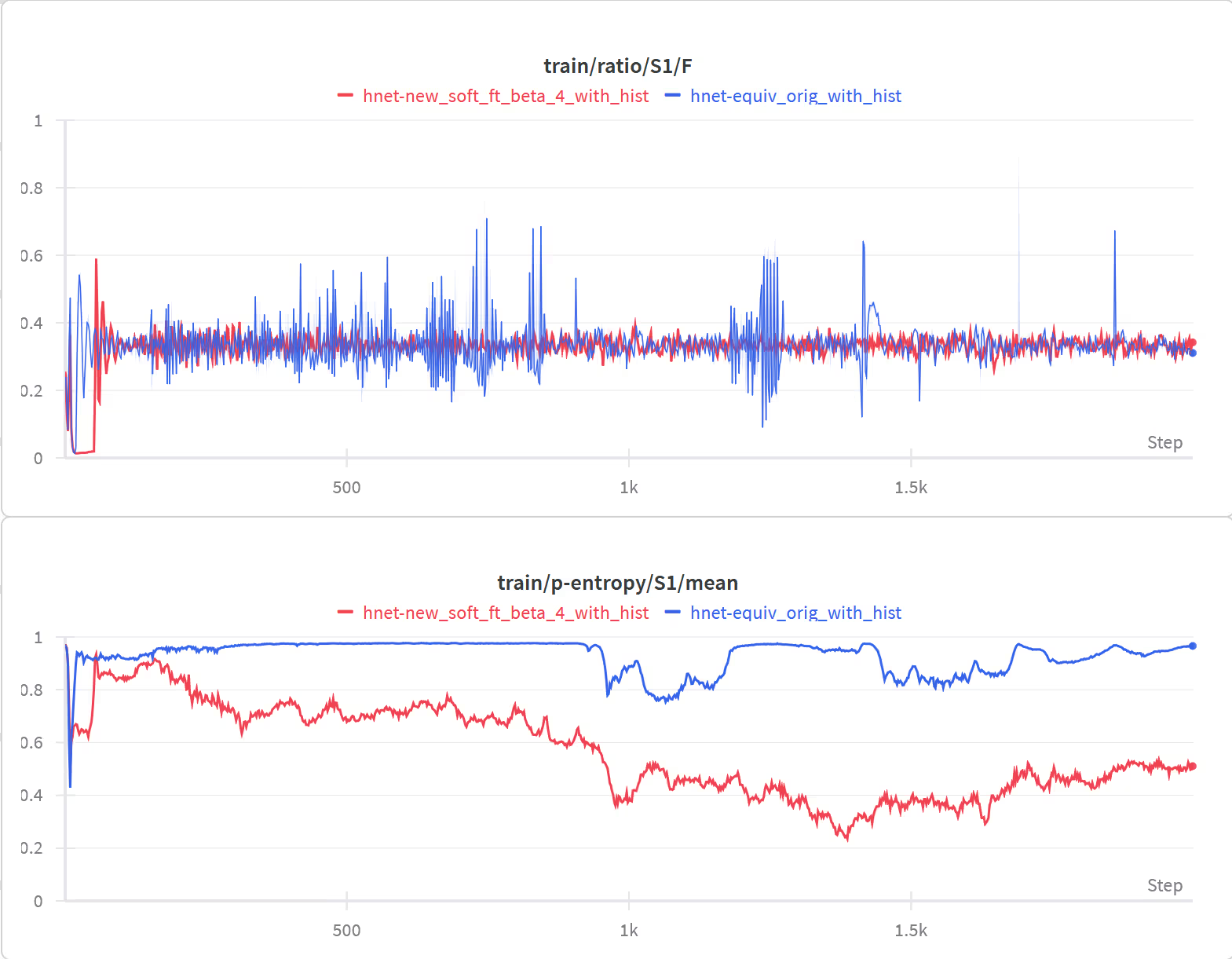

Practically, as mentioned, pushing harder on middling ‘s increases polarization. In my testing this manifests as moderately lower mean binary entropy and more stable ratio loss. I observe spikes in the original Goomba’s ratio loss (due to spikes in ). Fewer values straddling intuitively leads to more stable .

Here is a tiny example training run comparison of two 2-stage models; blue is Goomba loss and red is . The top graph is comparing the values for stage 1 and the bottom graph is comparing the mean binary entropy for stage 1.

If binary entropy decreases naturally as a result of training then this stability shouldn’t be a problem later in training. Fine, in that case you can use a larger like or in the beginning of training and decay it smoothly to at which point it becomes identical in optimization behavior to the Goomba ratio loss. But, does entropy naturally fall during training?

The router’s binary entropy

The paper claims:

Mechanistically, although is not differentiable, the network can be trained toward targeted compression ratios through , which provides continuous feedback.

When , the loss attains a minimum of when . Interestingly, the loss can theoretically fall below 1 when (e.g., and ), which we indeed observe during training. Despite this theoretical possibility, the loss effectively guides the model toward the desired compression ratio in practice. In practice, as our architectural design encourages the routing module to make confident decisions (i.e., boundary probabilities approaching 0 or 1), naturally converges toward , and the loss effectively guides the model toward the desired compression ratio.”

As mentioned, is not a minimum it’s a saddle point. And, as is clear from the form of their loss above, when or when . The gradient dies when attains its target; there is no gradient pressure from this ratio loss for to attain its target.

Let’s look at some actual stats for FineWeb-Edu on the checkpoints Goomba uploaded for hnet_1stage_L and hnet_2stage_L.

== H-Net batch setup (FineWeb-Edu) ==

batch_size: 256 max_len: 512 fineweb_name: sample-10BT

============ 2-stage ============

model_path: ./hnet_2stage_L.pt

config_path: ./configs/hnet_2stage_L.json

n_compress: 1-3-9

CE_loss (nats/token): 0.573152

bpb (bits/byte): 0.826884

ratio_loss (sum): 2.044173

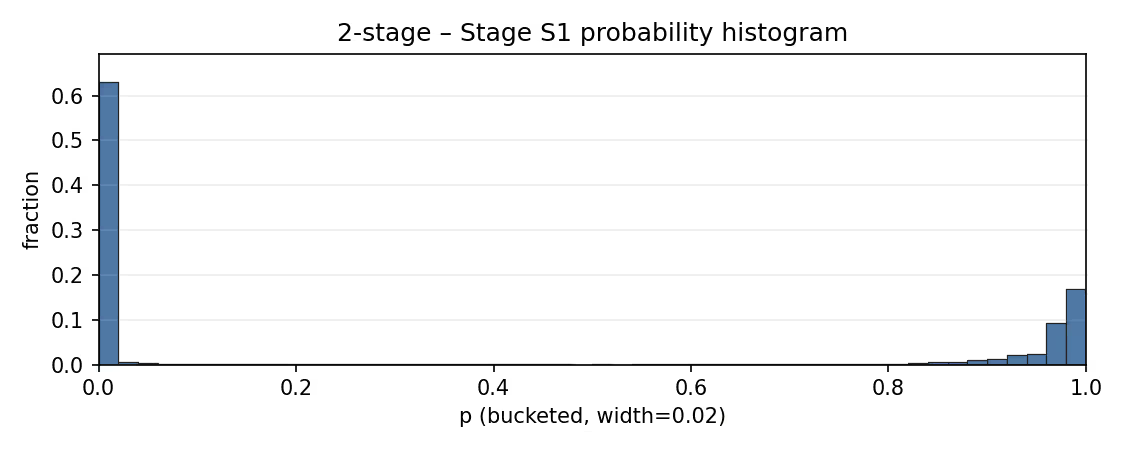

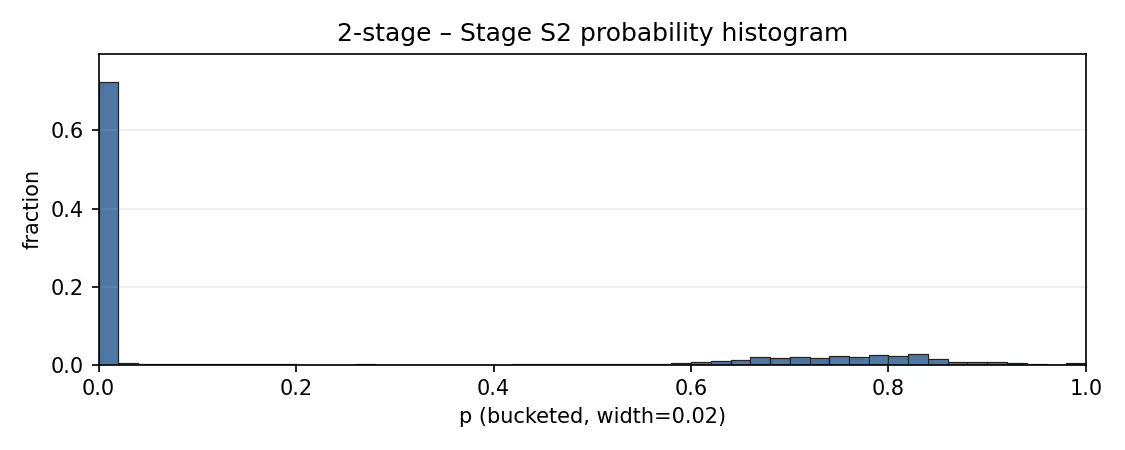

stage 1: F=0.350883 G=0.340252 ratio_loss=1.000546 H(p) mean=0.087364 bits var=0.024160

stage 2: F=0.258758 G=0.203334 ratio_loss=1.043626 H(p) mean=0.218104 bits var=0.120050

saved histogram: p_hist_2-stage_S1.png

saved histogram: p_hist_2-stage_S2.png

============ 1-stage ============

model_path: ./hnet_1stage_L.pt

config_path: ./configs/hnet_1stage_L.json

n_compress: 1-6

CE_loss (nats/token): 0.579933

bpb (bits/byte): 0.836667

ratio_loss (sum): 1.007449

stage 1: F=0.207146 G=0.192224 ratio_loss=1.007449 H(p) mean=0.087115 bits var=0.029150

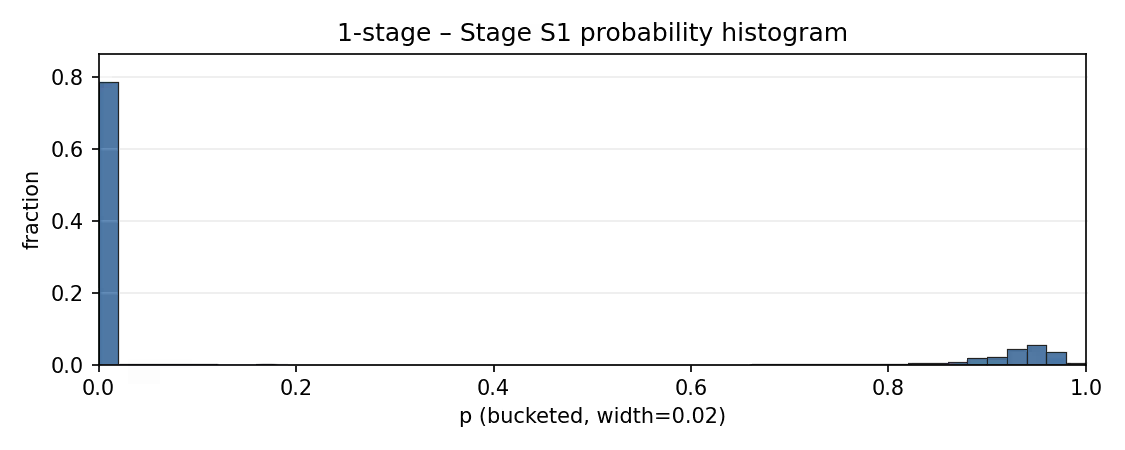

saved histogram: p_hist_1-stage_S1.pngDo we see here? Eh, kinda.

The 2-stage model has a target of for both stages: the first stage of the 2-stage model looks closest; its second stage isn’t very close.

The 1-stage model has a target of : it’s kinda close.

I included the mean and variance of the binary entropy of the ‘s. To understand what those “should” be let’s go over what happens when we optimize different quantities (not via SGD but just general optimization) subject to maximum mean binary entropy.

-

Optimize for . We get the trivial solution that all ‘s are , and therefore . Training collapses. In particular if then the mean binary entropy bits.

-

Optimize for . We get values which are and values which are . We get and the mean binary entropy approaches bit.

-

Optimize for both and . The situation here is slightly more complex. The maximum entropy solution has values at and values at . In particular substitute and . We get values at and values at for , and bits. This entropy is only slightly less than option 1.

In practice with short training runs I find that Goomba’s H-Net setup resembles situation 2 early in training: the ‘s cluster around giving high mean entropy. It’s clear that their ratio loss has no mechanism for decreasing entropy. A decrease in entropy must come from the other gradient pressure on the ‘s: the EMA smoothing in the upsampler and the “confidence”-STE.

The 1-stage Goomba checkpoint has H(p) mean=0.087115 bits

And the 2-stage Goomba checkpoint has H(p) mean=0.087364 bits on the first and H(p) mean=0.218104 bits on the second stage.

These entropies are much lower than what I observe in short training runs. For longer training runs like Goomba’s I’d like to know: how do these binary entropies drop over time? Is it sudden or gradual?

Is it fair to call these “confident decisions (i.e., boundary probabilities approaching 0 or 1)”? eh. You can see that stage 2 of the 2-stage model has a fair mass in the region with a mean entropy of bits. Noticeably all these stages seem to be much more confident around than , with significant mass in [.

The confidence score STE

Between the EMA smoothing in the upsampler and the “confidence scoring”, surely the latter is responsible for causing an entropy drop, right? I’m not so confident.

They define

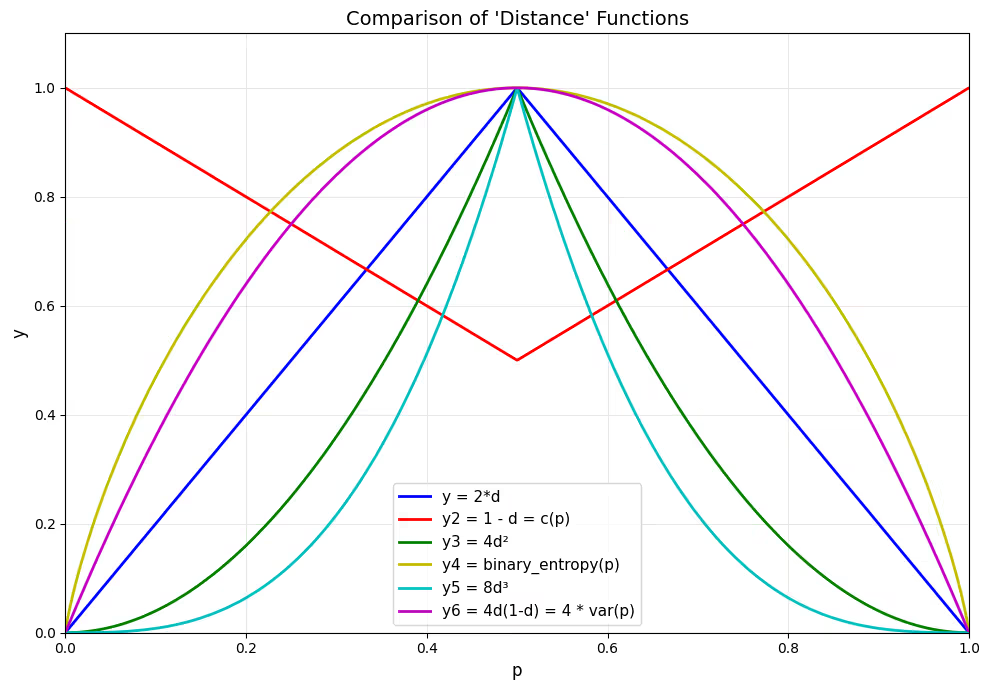

I’ll make my own definition, which we can call the “distance to boundary function” or just “distance function”. For a given it just gives the distance to or , whichever is less:

Then their confidence score can just be written as

The distance function has range and accordingly their confidence score has range . I find this definition simpler. More on this later.

In the upsampler, for each position , they scale the most-recent EMA-smoothed with . They use an STE such that appears to be in the forward pass. I.e.,

Let’s analyze the impact of this gradient on our ‘s. Let .

We have , so

Notice that the sign of can be positive or negative regardless of . So all four cases are possible here. Polarization of doesn’t obviously pop out of this.

What if we wanted a stronger gradient closer to (and a weaker gradient closer to boundaries)? That might actually cause some polarization. I.e., what if we wanted

In that case we can just take

with range . If we feel guilty about that range we can take

But, again, only the gradient actually matters. So let’s ignore the additive constants.

Now that we’re looking at it, why not tweak the coefficient on more thoroughly? This term doesn’t get used in the forward pass; the scaling on is analogous to a loss term coefficient. Goomba’s existing could just as well be written

for some positive coefficient . They used but I doubt that’s optimal.

Let’s write our variant as

I haven’t tested this; I’m just demonstrating that their choice isn’t obviously better than nearby alternatives. This should be tested.

Interpreting the “confidence score”

Is it fair to call this a “confidence score”? To me it seems to be a stretch given that we’re only using the function for its derivative. We just want . That’s it.

If we interpret the gradients of the ‘s I think it’s possible to get an intuition for what its real effect is. To be fully explicit, let’s exhaust all cases. For simplicity ignore since it’s measure and assume we’re talking about a 1-stage H-Net.

-

. This position is a boundary. The main network saw when it produced . Scaling the smoothed version, will either (locally) (net) decrease or increase the loss. Instead of scaling, we will interpret that as: decreases loss increase so this boundary position is more likely, increases loss decrease so this boundary position is less likely.

-

. This position is not a boundary. The main network saw the most recent boundary as a proxy instead, i.e. . Scaling the smoothed version, will either (locally) (net) decrease or increase the loss. Instead of scaling, we will interpret that as: decreases loss the proxy in this position is working out well (why ruin a good thing?) we should lower such that this position continues not being a boundary, increases loss the proxy in this position isn’t working well, let’s maybe give this position a chance to be a boundary.

This confidence STE is just a heuristic. We don’t actually have the counterfactual world where the main network saw anything other than the boundary position ‘s that it did.

Alternative “confidence score”-like heuristics

It’s easy to come up with other heuristics that sound just as plausible (if more complex to implement). Here are some ideas along those lines.

Notice that a position borrows from a previous boundary iff every position in has .

You could replace the pointwise gate tweak with a structure‑aware credit assignment that follows the model’s realized routing. Two rough ideas

-

Pool feedback over each decision’s “region of responsibility” then nudge the entry decision up or down while counter‑nudging the interior positions based on whether that region helped or hurt

-

Trace feedback token‑by‑token along the actual route taken, weighting each position by how much the route depended on it

As with the alternative confidence score function above, I haven’t tested these ideas yet but it seems reasonable to me that they could improve over the confidence STE.

Directly penalizing high entropy

Recapping the above with regards to entropy,

-

the Goomba ratio loss doesn’t decrease binary entropy

-

Early training has high binary entropies; I observe still after 3k steps.

-

will decrease entropy moderately early in training. For moderate ‘s I observe roughly to bits after a few thousand steps.

-

By the end of Goomba training some emergent combination of EMA + confidence-scoring-STE has significantly decreased the entropies. They’re still not drastically low e.g. bits.

An alternative we can try is to directly penalize high entropy. E.g., let

where is the binary entropy function.

Then we could introduce a new loss term

While this works mathematically, the gradients are largest near the boundaries (close to and ), which is counterproductive for our goal of polarization. We want exactly the opposite: strong gradients near and weak gradients near the boundaries. As an alternative we can use the square of the distance function from earlier.

Define:

This has zero gradients at the boundaries and maximal gradients near (the derivative is undefined exactly at ; not a problem in practice. 2)

Here’s a visualization of (comparably-scaled versions of) various related functions so you can compare their shapes. I included the confidence function from earlier and the Bernoulli variance too, for comparison.

In my experiments this regularization term works, even with very low weights like . Even if we only optimize e.g. with our ratio loss being with no pressure on at all the inclusion of this regularization will rapidly (few hundred steps) decrease the entropy to something like to bits, and will be on target. Interestingly, I observed that the second stage entropy decreases much more gradually. While it will also go on target fine in a couple hundred steps, the drop in entropy is much more gradual after that (whereas stage 1 dips precipitously).

If we find this regularization too aggressive we can apply a margin loss where gradient pressure is only applied if the mean value exceeds some value corresponding to a binary entropy of, e.g. bits. This also works, but similar to the above, the second stage is much more stubborn about dropping below the margin. In my experiment the first stage entropy dropped well below the target but the second stage was slightly high.

Is forcing low entropy a good idea?

It seems plausible to me that artificially forcing low entropy in the router could harm learning. For example, artificially forcing low entropy could plausibly harm learning by causing the router to prematurely commit to a suboptimal routing strategy. It also seems plausible that it could help learning via the stability benefits. Needs more experiments at larger scales than I can manage. And in the tiny runs that I’ve done using just I observe that the histogram of probabilities for the router is shifting around in shape drastically during training, despite the push towards moderate entropy. I predict that the moderate entropy drop early in training from using just will be net neutral or mildly positive for final loss. I think the stability benefits are a win.

Cosine Q/K routing

I find it hard to believe that the Mamba2 layers in the encoder can’t easily find the inductive bias of the cosine Q/K routing in the paper. In the ablations in section 3.3 they mention using a head for routing (probably linear or MLP) but say the wild fluctuations in compression ratio harmed performance. The graphs in Figure 7 support that.

Seems plausible to me that some of the techniques in this post could be used to stabilize a linear or MLP router.

The Holy Grail: ‘natural’ gradients for the down/upsampling

The EMA smoothing seems fairly intuitive and motivated to me. I can also accept the ratio loss (at least my generalization of it): a target compression ratio is reasonable. But, the confidence-score-STE is clearly a hack. Unfortunately, as shown in the ablations, it clearly helps the final loss. It seems very plausible that there are alternative hacks which work even better.

But, is there an HNet-like architecture which has a better down/upsampling design with ‘natural’ gradients that fall out cleanly from the definition of the forward pass? (Which also outperforms H-Net). This is less clear to me. After all, MoE’s are basically a hack and afaik no one has an ‘elegant’ alternative.

I hope we find out!